15 September 2020

This is the third part of a 5-part series on AWS exploits and similar findings discovered over the course of 2020. All findings discussed in this series have been disclosed to the AWS security team and had patches rolled out to all affected regions, where necessary. A big thanks to my friend and fellow Australian Aidan Steele for co-authoring this series with me. Check out parts 2 and 4 for his work!

This part of the Security September series covers a timing attack against the internal process CloudWatch Synthetics runs when creating a canary. The attack makes it possible to change settings of the canary that would otherwise be locked in by the immutability of Lambda versions.

The Synthetics feature of the CloudWatch service allows developers to monitor application endpoints by emulating customer behaviour using Puppeteer, a browser automation framework. Each request to monitor a specific endpoint is referred to as a canary, and is accompanied by user-specified code that targets specific features of an HTTP endpoint.

To achieve this, the service creates a Lambda function within the developer's account that is periodically invoked to execute the tests. The function includes both the user-specified code and a predefined Lambda layer that holds the Puppeteer library and other helper functions. The Lambda layer is publicly accessible and holds some neat secrets.

When I issue a request to create a canary, the service assumes the provided role (or creates it), creates the Lambda function within my account, publishes version 1, and then references that version for all future runs of the canary. In doing this, the service ensures that neither the user-generated code nor the predefined Lambda layer is altered during the canary's lifetime.
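To make the immutability point concrete, here is a minimal Python model of a function with a mutable `$LATEST` configuration and published versions that are frozen snapshots. `FakeLambdaFunction` and its methods are hypothetical stand-ins, not the boto3 or Lambda API; the only real behaviour it tries to mirror is that a published version captures whatever the configuration looks like at the moment of publishing.

```python
import copy
from dataclasses import dataclass, field

@dataclass
class FakeLambdaFunction:
    """Hypothetical in-memory stand-in for a Lambda function (not the real API)."""
    config: dict                      # the mutable $LATEST configuration
    versions: dict = field(default_factory=dict)

    def update_configuration(self, **changes) -> None:
        # Configuration edits only ever touch $LATEST.
        self.config.update(changes)

    def publish_version(self) -> str:
        # A version is a frozen copy of $LATEST at the moment of publishing.
        number = str(len(self.versions) + 1)
        self.versions[number] = copy.deepcopy(self.config)
        return number

fn = FakeLambdaFunction(config={"MemorySize": 1000})
v1 = fn.publish_version()              # the service pins the canary to "1"
fn.update_configuration(MemorySize=3008)
print(fn.versions[v1]["MemorySize"])   # → 1000: later edits never reach version 1
```

The catch is in the timing: version 1 freezes `$LATEST` as it stands at publish time, not as it stood when the service created the function.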

However, in the timeframe between creating the function and publishing the version, I can modify the Lambda configuration, and those modifications are published in version 1. Normally this gap is far too short to exploit, and widening it would probably require deliberately exhausting a rate limit to slow the calls down. However, I can set VPC options on the canary, which causes Lambda to create an ENI, and the internal process polls for that to complete every 5 seconds. This gives me a 0-5 second window to perform the actions required.
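A rough model of that window, assuming the service polls at fixed 5-second intervals and that the function can only be reconfigured once it has left its pending state: the first poll at or after the moment the ENI is ready triggers the publish, so any change made between "ready" and that poll ends up in version 1. `attack_window` and its parameters are hypothetical names for illustration, not part of any AWS API.

```python
import math

def attack_window(first_poll: float, ready_at: float, interval: float = 5.0) -> tuple[float, float]:
    """Hypothetical model of the Synthetics wait loop.

    Polls fire at first_poll + k * interval. The first poll at or after
    ready_at sees the function as ready and publishes version 1 straight away,
    so a configuration change made in [ready_at, publish_at) is captured.
    """
    # Index of the first poll that happens at or after the function is ready.
    k = max(0, math.ceil((ready_at - first_poll) / interval))
    publish_at = first_poll + k * interval
    return ready_at, publish_at

# ENI ready 77 s after the first poll: polls at 75 s (still pending) and 80 s (publish).
start, end = attack_window(first_poll=0.0, ready_at=77.0)
print(end - start)  # → 3.0
```

Because the poll interval is fixed, the window length is always somewhere between 0 and 5 seconds, depending on where the ENI's completion falls relative to the polling schedule.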

The result is that I can create a canary which consumes the maximum amount of Lambda memory (3008MB at the time of writing), roughly 3x the usual static allocation of 1000MB. We can also use this attack to increase the time limit to 15 minutes or, for example, swap out the base layer for one targeting a different runtime.

UPDATE: Since drafting this post, the ability to change the Lambda memory allocation has been made available as a feature. Time limit and runtime changes are still not supported.

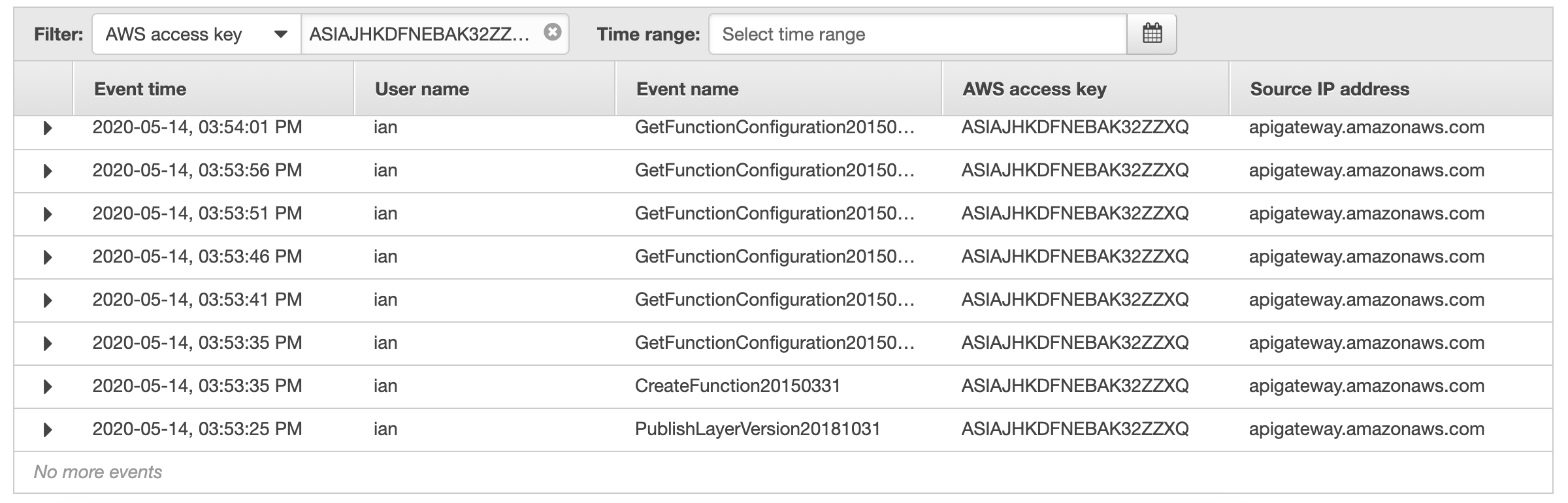

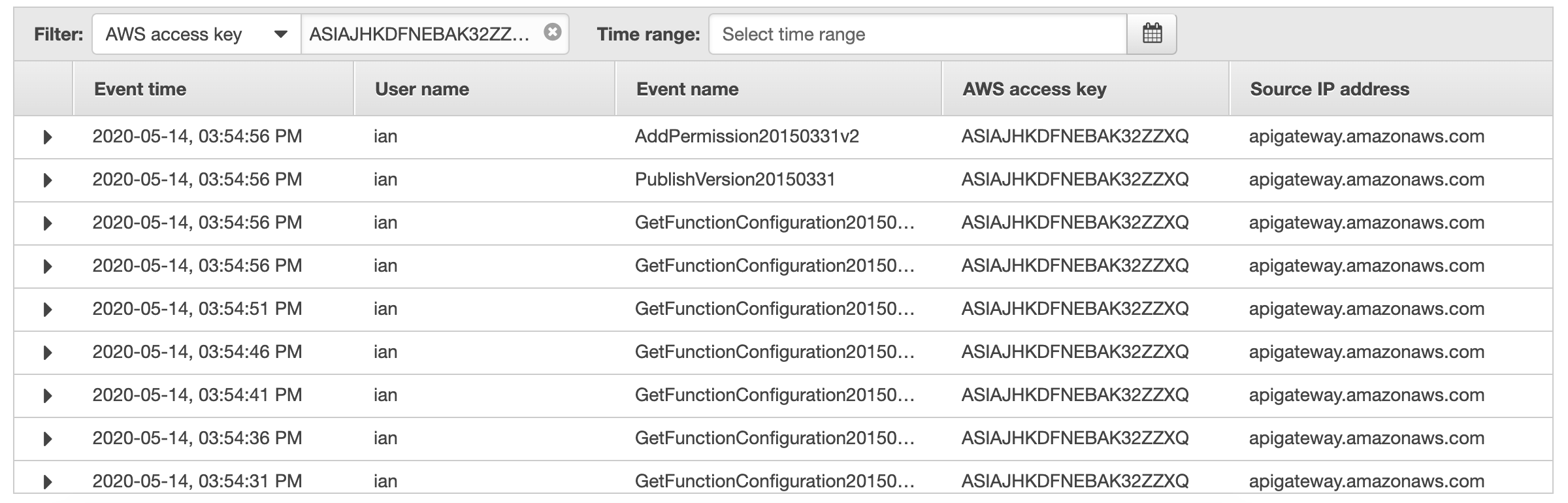

The CloudWatch Synthetics process (shown here with a source IP of apigateway.amazonaws.com) creates the function at 03:53:35PM. It then enters the 5-second loop, waiting for Lambda to provision ENIs in the VPC.

At approximately 03:54:52PM (+/- 1 second), the function creation completes. At this point, the most recent (failed) check was at 03:54:51PM.

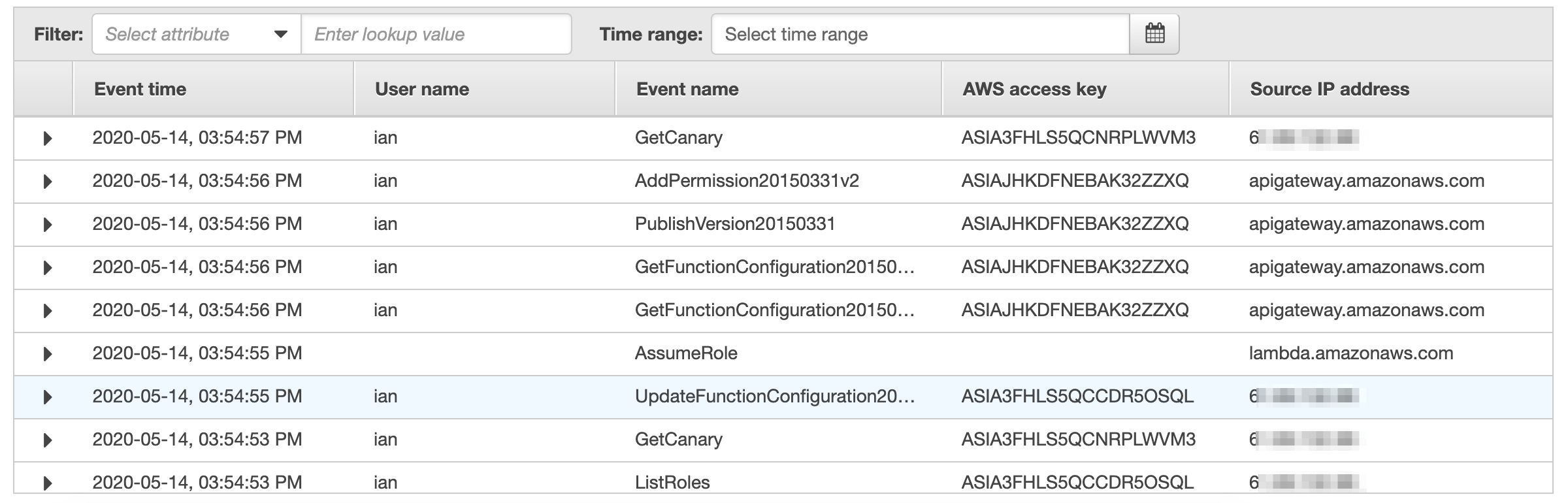

At 03:54:53PM I verify that the Lambda creation (i.e. ENI allocation) has completed, and at 03:54:55PM I make a change to increase the memory to the maximum of 3008MB (note that I could make any change here; memory is just an example). At 03:54:56PM, the CloudWatch Synthetics process sees that the function is ready and publishes version 1, which includes my change.
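Replaying the timestamps above against a 5-second poll shows why the change landed. This sketch uses only the times quoted from the logs; the calendar date doesn't matter, so Python's default date from `strptime` is fine, and the "ready" time is the approximate one (+/- 1 second).

```python
from datetime import datetime, timedelta

def at(clock: str) -> datetime:
    # Parse a wall-clock time as quoted in this post, e.g. "03:54:51PM".
    return datetime.strptime(clock, "%I:%M:%S%p")

POLL_INTERVAL = timedelta(seconds=5)

created = at("03:53:35PM")           # Synthetics creates the function
last_failed_poll = at("03:54:51PM")  # last poll that saw the function still pending
function_ready = at("03:54:52PM")    # approximate, +/- 1 second
my_update = at("03:54:55PM")         # my memory change
publish = last_failed_poll + POLL_INTERVAL  # next poll succeeds and publishes

assert publish == at("03:54:56PM")   # matches the observed publish time
window = (publish - function_ready).total_seconds()
captured = function_ready <= my_update < publish
print(f"ENI wait: {(function_ready - created).total_seconds():.0f}s, "
      f"window: {window:.0f}s, update captured: {captured}")
# → ENI wait: 77s, window: 4s, update captured: True
```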

I raised the issue directly with the AWS security team, who got back to me within a day.

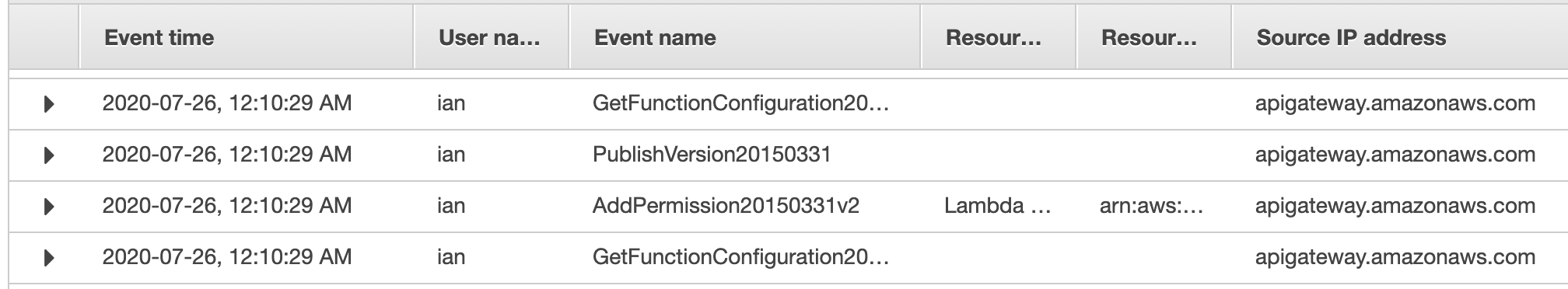

After a few weeks, the team got back to me confirming they had patched out the ability to make this change. Now, immediately (within a second) before publishing the Lambda version, the service checks the Lambda configuration to determine whether alterations have been made:

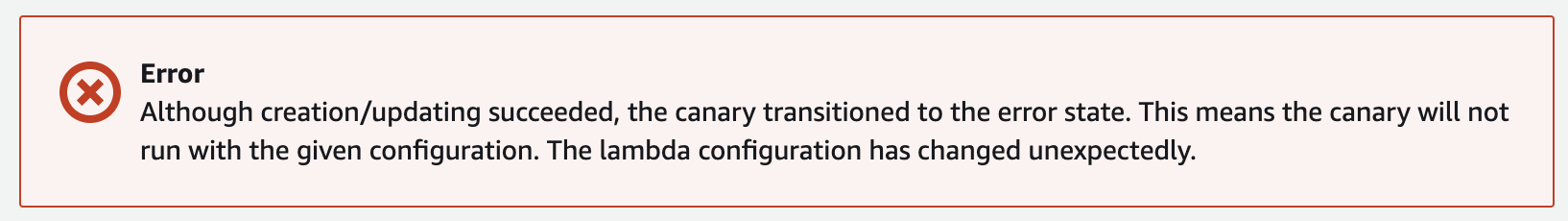

If the service detects that an alteration has been made, it rejects the completion of the canary deployment.
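The fix as described boils down to a compare-before-publish check. Below is a minimal sketch of that idea, assuming the service compares the configuration it requested with the one it reads back just before publishing; `publish_if_unchanged` and `CanaryTamperedError` are hypothetical names, not AWS internals. A check like this still leaves a small gap between the read and the publish. Lambda's PublishVersion API accepts a `RevisionId` (and `CodeSha256`) so that the publish itself fails if the function has changed since that revision, which is one way to close the gap entirely, though I don't know whether the service uses it.

```python
class CanaryTamperedError(Exception):
    """Hypothetical error raised when the function drifted before publishing."""

def publish_if_unchanged(expected: dict, current: dict, publish) -> str:
    """Publish only if the live configuration still matches what the service created.

    expected: configuration the service itself requested at creation time.
    current:  configuration read back immediately before publishing.
    publish:  callable that publishes the version (stand-in for PublishVersion).
    """
    # Collect every key whose value differs, in either direction.
    drift = {k: (expected.get(k), current.get(k))
             for k in expected.keys() | current.keys()
             if expected.get(k) != current.get(k)}
    if drift:
        raise CanaryTamperedError(f"configuration changed before publish: {drift}")
    return publish()

expected = {"MemorySize": 1000}
print(publish_if_unchanged(expected, {"MemorySize": 1000}, lambda: "1"))  # → 1
try:
    publish_if_unchanged(expected, {"MemorySize": 3008}, lambda: "1")
except CanaryTamperedError as err:
    print(err)  # the deployment is rejected instead of pinning a tampered version
```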

Atomicity is still a difficult issue in tech. After seeing the way this service is structured, my belief is that the AWS engineers who worked on it were aware of the potential for this issue to be exploited and (correctly) decided that the risk and consequence were low enough not to warrant specific measures to protect against alterations. Even with the low consequence, the team took the time to ensure that the service could not be targeted in this way moving forward.

Thanks to the AWS security and CloudWatch Synthetics team members who worked with me to help remediate this issue.